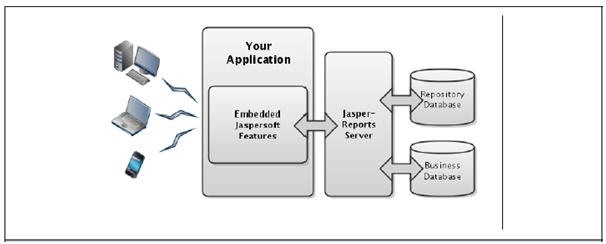

Embedding BI means adding rich data visualization and manipulation to an application by building Jaspersoft’s BI suite into its design and code.

All of the following can be achieved by embedding Jaspersoft BI:

1. Reports that run on demand with output in your application or delivered in a file.

2. A repository of reports with secure role-based access, scheduling, and email delivery.

3. Interactive reports and dashboards displayed in your application.

4. Self-service ad-hoc reporting and advanced analytics integrated into your application.

Based on typical user scenarios and experience with numerous real-world implementations, Jaspersoft has identified five levels of embedded BI functionality:

Level 1: Embedding of Static Reporting.

Level 2: Embedding of Managed Interactive Reports.

Level 3: Embedding of Highly Interactive Reports and Dashboards.

Level 4: Embedding of Self-Service Reporting and Ad-hoc views.

Level 5: Embedding of Advanced Analytics.

Level 1: Embedding of Static Reporting

The Jaspersoft embedded BI solution for level 1 is implemented directly with the APIs of the JasperReports Library. Using this library, an application can programmatically define a data source, use it to fill and display a report, and then export the report in formats such as PDF, HTML, and Excel.

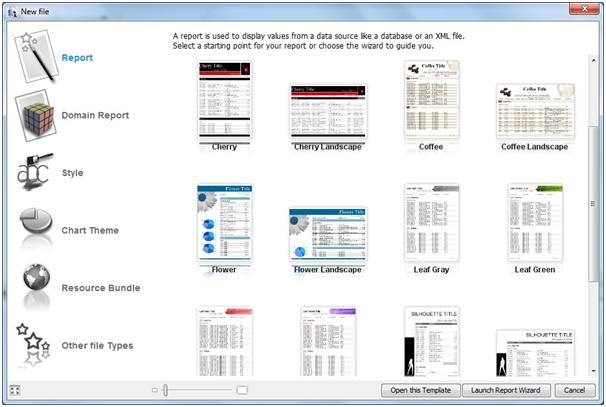

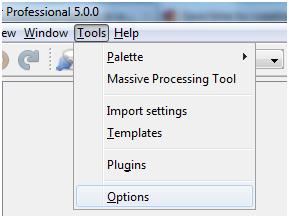

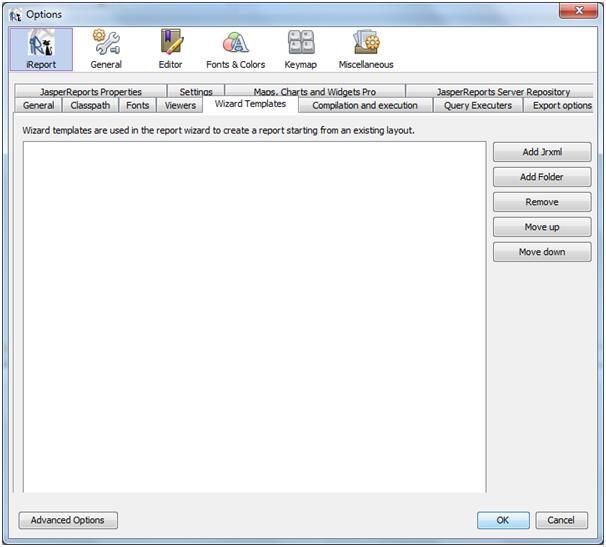

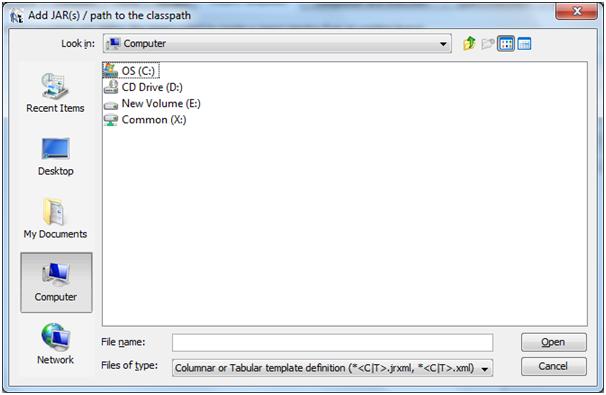

Reports are designed separately in iReport or Jaspersoft Studio, then saved as JRXML files that are bundled with the application.

Level 2: Embedding of Managed Interactive Reports

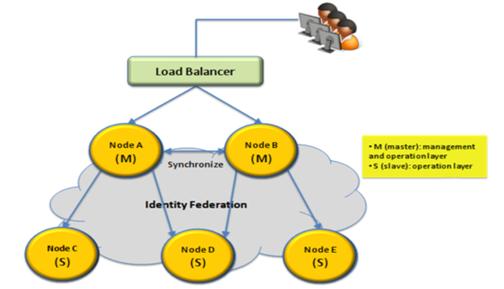

The Jaspersoft embedded BI solution for level 2 relies on web service APIs to access reports on the server. The end-user application has its own user interface, but when it wants to list or run a report, it makes calls to the server.

Web services are a set of APIs that let the calling application request information that is processed or stored on the server. They use HTTP to exchange XML and JSON objects over the internet.

For example, the calling application can request a URL that represents a search of the repository, and the server responds with XML objects for each report that matched the search.

JasperReports Server implements both REST and SOAP web services, but this document focuses only on the more modern REST services.
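As a rough illustration of the repository search and report request described above, the sketch below builds the request URLs and parses an XML reply. The host, the `rest_v2/resources` and `rest_v2/reports` paths, the `q` and `type` parameters, and the `resourceLookup` element names are assumptions based on the REST v2 conventions and should be checked against your server version. The sample response is made up for illustration, and no network call is made.

```python
from urllib.parse import urlencode
import xml.etree.ElementTree as ET

# Hypothetical base URL of a JasperReports Server instance.
BASE_URL = "http://localhost:8080/jasperserver"


def build_search_url(query, resource_type="reportUnit"):
    """Return a repository search URL (path and parameter names are assumptions)."""
    params = urlencode({"q": query, "type": resource_type})
    return f"{BASE_URL}/rest_v2/resources?{params}"


def build_report_url(report_uri, fmt="pdf"):
    """Return a URL that asks the server to run a report in the given format."""
    return f"{BASE_URL}/rest_v2/reports{report_uri}.{fmt}"


def parse_search_response(xml_text):
    """Extract (label, uri) pairs from an XML search response."""
    root = ET.fromstring(xml_text.strip())
    return [
        (item.findtext("label"), item.findtext("uri"))
        for item in root.iter("resourceLookup")
    ]


# Made-up response body, shaped like the XML a search might return.
SAMPLE_RESPONSE = """
<resources>
  <resourceLookup>
    <label>Monthly Sales</label>
    <uri>/reports/sales/monthly_sales</uri>
    <resourceType>reportUnit</resourceType>
  </resourceLookup>
  <resourceLookup>
    <label>Sales by Region</label>
    <uri>/reports/sales/sales_by_region</uri>
    <resourceType>reportUnit</resourceType>
  </resourceLookup>
</resources>
"""

if __name__ == "__main__":
    print(build_search_url("sales"))
    for label, uri in parse_search_response(SAMPLE_RESPONSE):
        print(f"{label}: {build_report_url(uri)}")
```

In a real application, the host application would send these URLs with an authenticated HTTP client and render the returned list in its own user interface.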

Level 3: Embedding of Highly Interactive Reports and Dashboards

The Jaspersoft embedded BI solution for level 3 is to use iframes to display reports and dashboards that are being served directly from JasperReports Server. JasperReports Server has a web interface that creates interactive reports and dashboards in web pages in the user’s browser.

In level 3, the host application uses iframes to display the server’s pages inside its own user interface. The embedding application only provides the placeholder for the iframe, and users then interact directly with JasperReports Server within that iframe.

An iframe is an HTML element that creates an inline frame for external content. This solution therefore applies to applications that are themselves web applications accessed through a browser, and to applications that can display HTML in their user interface. In either case, JasperReports Server provides mechanisms to customize the look and feel of its content so that the contents of the iframe blend seamlessly with the appearance of the host application.
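A minimal sketch of how a host application might generate such an iframe is shown here. The server URL is hypothetical, and the `flow.html` path with the `_flowId`, `reportUnit`, and `decorate` parameters is an assumption about the server's report viewer URL; verify it against your installation before relying on it.

```python
from html import escape
from urllib.parse import urlencode

# Hypothetical base URL of a JasperReports Server instance.
SERVER_URL = "http://localhost:8080/jasperserver"


def report_iframe(report_uri, width=800, height=600):
    """Return an <iframe> tag pointing at the server's report viewer.

    The query parameters below are assumptions about the viewer URL;
    "decorate=no" is meant to hide the server's own page chrome.
    """
    query = urlencode(
        {
            "_flowId": "viewReportFlow",
            "reportUnit": report_uri,
            "decorate": "no",
        },
        safe="/",  # keep repository paths readable in the URL
    )
    src = f"{SERVER_URL}/flow.html?{query}"
    # Escape the URL so "&" is valid inside the HTML attribute.
    return (
        f'<iframe src="{escape(src, quote=True)}" '
        f'width="{width}" height="{height}"></iframe>'
    )


if __name__ == "__main__":
    print(report_iframe("/reports/sales/monthly_sales"))
```

The host page supplies only this placeholder; everything rendered inside the frame comes from the server.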

Level 4: Embedding of Self-Service Reporting and Ad-hoc views

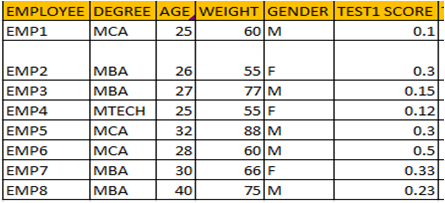

The Jaspersoft embedded BI solution for level 4 again uses iframes, this time to provide access to the Ad-hoc editor, where users work with data presented through a Domain. The powerful in-memory engine of the Ad-hoc editor allows users to explore their data dynamically by dragging and dropping columns and rows, changing summary functions, pivoting, and drilling down to detailed tables.

As in level 3, the host application uses iframes to display the server’s pages inside of its own user interface, and then users interact directly with JasperReports Server within that iframe.

Level 5: Embedding of Advanced Analytics

The Jaspersoft embedded BI solution for level 5 provides several tools for working with OLAP cubes:

1. Jaspersoft ETL (Extract, Transform, and Load) allows you to prepare and import large volumes of data automatically from any number of sources into any number of data structures, including optimized relational databases used to define OLAP cubes.

2. The schema workbench lets you define an OLAP schema for data in a ROLAP cube.

3. OLAP views combine a connection to the ROLAP cube with an MDX query to give access to the multidimensional data.

OLAP views let users slice, dice, and drill down through the cubes. Advanced users can edit the MDX query to modify their view of the cube.
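For readers unfamiliar with the terms, slicing and drilling down can be illustrated on a toy fact table. The sketch below is plain Python, not MDX, and does not reflect how the OLAP engine works internally; the data, dimension names, and function names are all invented for illustration.

```python
from collections import defaultdict

# Invented fact rows: (year, region, product, sales)
FACTS = [
    (2023, "East", "Widgets", 100),
    (2023, "East", "Gadgets", 50),
    (2023, "West", "Widgets", 70),
    (2024, "East", "Widgets", 120),
    (2024, "West", "Gadgets", 90),
]
DIMENSIONS = ("year", "region", "product")


def aggregate(rows, by):
    """Sum sales grouped by the named dimensions."""
    indexes = [DIMENSIONS.index(name) for name in by]
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[i] for i in indexes)] += row[3]
    return dict(totals)


def slice_cube(rows, dimension, value):
    """Slice: keep only rows where one dimension equals a fixed value."""
    i = DIMENSIONS.index(dimension)
    return [row for row in rows if row[i] == value]


if __name__ == "__main__":
    # Top-level view: total sales per year.
    print(aggregate(FACTS, ["year"]))
    # Drill down: break each year into regions.
    print(aggregate(FACTS, ["year", "region"]))
    # Slice on region, then view by product.
    print(aggregate(slice_cube(FACTS, "region", "East"), ["product"]))
```

Drilling down here simply adds a dimension to the grouping, while slicing fixes one dimension to a single value before aggregating.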